Markov Chains

Markov Chains

1.I.11H

Part IB, 2004Let be a transition matrix. What does it mean to say that is (a) irreducible, recurrent?

Suppose that is irreducible and recurrent and that the state space contains at least two states. Define a new transition matrix by

Prove that is also irreducible and recurrent.

1.II.22H

Part IB, 2004Consider the Markov chain with state space and transition matrix

Determine the communicating classes of the chain, and for each class indicate whether it is open or closed.

Suppose that the chain starts in state 2 ; determine the probability that it ever reaches state 6 .

Suppose that the chain starts in state 3 ; determine the probability that it is in state 6 after exactly transitions, .

2.I.11H

Part IB, 2004Let be an irreducible, positive-recurrent Markov chain on the state space with transition matrix and initial distribution , where is the unique invariant distribution. What does it mean to say that the Markov chain is reversible?

Prove that the Markov chain is reversible if and only if for all .

2.II.22H

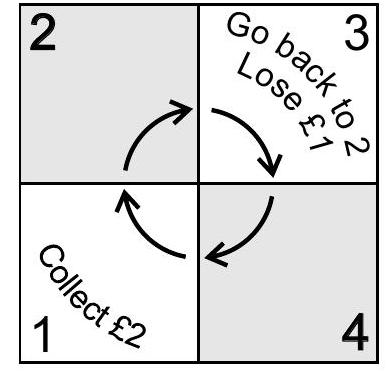

Part IB, 2004Consider a Markov chain on the state space with transition probabilities as illustrated in the diagram below, where and .

For each value of , determine whether the chain is transient, null recurrent or positive recurrent.

When the chain is positive recurrent, calculate the invariant distribution.

1.II.19D

Part IB, 2005Every night Lancelot and Guinevere sit down with four guests for a meal at a circular dining table. The six diners are equally spaced around the table and just before each meal two individuals are chosen at random and they exchange places from the previous night while the other four diners stay in the same places they occupied at the last meal; the choices on successive nights are made independently. On the first night Lancelot and Guinevere are seated next to each other.

Find the probability that they are seated diametrically opposite each other on the th night at the round table, .

2.II.20D

Part IB, 2005Consider a Markov chain with state space and transition probabilities given by

with , otherwise, where and .

For each , let

that is, the probability that the chain ever hits the state 0 given that it starts in state . Write down the equations satisfied by the probabilities and hence, or otherwise, show that they satisfy a second-order recurrence relation with constant coefficients. Calculate for each .

Determine for each value of , whether the chain is transient, null recurrent or positive recurrent and in the last case calculate the stationary distribution.

[Hint: When the chain is positive recurrent, the stationary distribution is geometric.]

3.I.9D

Part IB, 2005Prove that if two states of a Markov chain communicate then they have the same period.

Consider a Markov chain with state space and transition probabilities determined by the matrix

Identify the communicating classes of the chain and for each class state whether it is open or closed and determine its period.

4.I.9D

Part IB, 2005Prove that the simple symmetric random walk in three dimensions is transient.

[You may wish to recall Stirling's formula: ]

1.II.19C

Part IB, 2006Explain what is meant by a stopping time of a Markov chain . State the strong Markov property.

Show that, for any state , the probability, starting from , that makes infinitely many visits to can take only the values 0 or 1 .

Show moreover that, if

then makes infinitely many visits to with probability

2.II.20C

Part IB, 2006Consider the Markov chain on the integers whose non-zero transition probabilities are given by and

(a) Show that, if , then hits 0 with probability .

(b) Suppose now that . Show that, with probability 1 , as either or .

(c) In the case compute as .

3.I.9C

Part IB, 2006A hungry student always chooses one of three places to get his lunch, basing his choice for one day on his gastronomic experience the day before. He sometimes tries a sandwich from Natasha's Patisserie: with probability this is delicious so he returns the next day; if the sandwich is less than delicious, he chooses with equal probability either to eat in Hall or to cook for himself. Food in Hall leaves no strong impression, so he chooses the next day each of the options with equal probability . However, since he is a hopeless cook, he never tries his own cooking two days running, always preferring to buy a sandwich the next day. On the first day of term the student has lunch in Hall. What is the probability that 60 days later he is again having lunch in Hall?

[ Note .]

4.I.9C

Part IB, 2006A game of chance is played as follows. At each turn the player tosses a coin, which lands heads or tails with equal probability . The outcome determines a score for that turn, which depends also on the cumulative score so far. Write for the cumulative score after turns. In particular . When is odd, a head scores 1 but a tail scores 0 . When is a multiple of 4 , a head scores 4 and a tail scores 1 . When is even but is not a multiple of 4 , a head scores 2 and a tail scores 1 . By considering a suitable four-state Markov chain, determine the long run proportion of turns for which is a multiple of 4 . State clearly any general theorems to which you appeal.

1.II.19C

Part IB, 2007Consider a Markov chain on states with transition matrix , where , so that 0 and are absorbing states. Let

be the event that the chain is absorbed in 0 . Assume that for .

Show carefully that, conditional on the event is a Markov chain and determine its transition matrix.

Now consider the case where , for . Suppose that , and that the event occurs; calculate the expected number of transitions until the chain is first in the state 0 .

2.II.20C

Part IB, 2007Consider a Markov chain with state space and transition matrix given by

and otherwise, where .

For each value of , determine whether the chain is transient, null recurrent or positive recurrent, and in the last case find the invariant distribution.

3.I.9C

Part IB, 2007Consider a Markov chain with state space and transition matrix

where and .

Calculate for each .

4.I.9C

Part IB, 2007For a Markov chain with state space , define what is meant by the following:

(i) states communicate;

(ii) state is recurrent.

Prove that communication is an equivalence relation on and that if two states communicate and is recurrent then is recurrent.

1.II.19H

Part IB, 2008The village green is ringed by a fence with fenceposts, labelled . The village idiot is given a pot of paint and a brush, and started at post 0 with instructions to paint all the posts. He paints post 0 , and then chooses one of the two nearest neighbours, 1 or , with equal probability, moving to the chosen post and painting it. After painting a post, he chooses with equal probability one of the two nearest neighbours, moves there and paints it (regardless of whether it is already painted). Find the distribution of the last post unpainted.

2.II.20H

Part IB, 2008A Markov chain with state-space has non-zero transition probabilities and

Prove that this chain is recurrent if and only if

Prove that this chain is positive-recurrent if and only if

3.I.9H

Part IB, 2008What does it mean to say that a Markov chain is recurrent?

Stating clearly any general results to which you appeal, prove that the symmetric simple random walk on is recurrent.

4.I.9H

Part IB, 2008A Markov chain on the state-space has transition matrix

Classify the chain into its communicating classes, deciding for each what the period is, and whether the class is recurrent.

For each say whether the exists, and evaluate the limit when it does exist.

Paper 3, Section I, H

Part IB, 2009Let be a simple random walk on the integers: the random variables are independent, with distribution

where , and . Consider the hitting time or , where is a given integer. For fixed define for . Show that the satisfy a second-order difference equation, and hence find them.

Paper 4, Section I, H

Part IB, 2009In chess, a bishop is allowed to move only in straight diagonal lines. Thus if the bishop stands on the square marked in the diagram, it is able in one move to reach any of the squares marked with an asterisk. Suppose that the bishop moves at random around the chess board, choosing at each move with equal probability from the squares it can reach, the square chosen being independent of all previous choices. The bishop starts at the bottom left-hand corner of the board.

If is the position of the bishop at time , show that is a reversible Markov chain, whose statespace you should specify. Find the invariant distribution of this Markov chain.

What is the expected number of moves the bishop will make before first returning to its starting square?

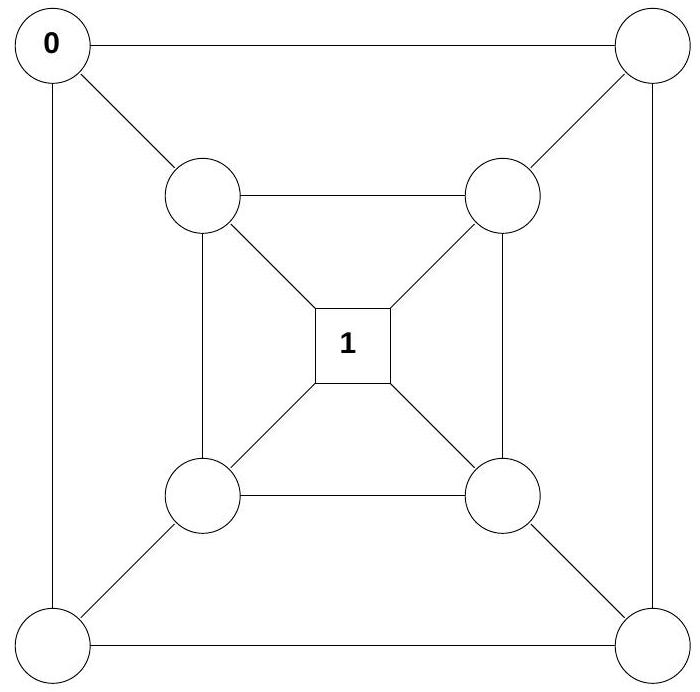

Paper 1, Section II, H

Part IB, 2009A gerbil is introduced into a maze at the node labelled 0 in the diagram. It roams at random through the maze until it reaches the node labelled 1. At each vertex, it chooses to move to one of the neighbouring nodes with equal probability, independently of all other choices. Find the mean number of moves required for the gerbil to reach node 1 .

Suppose now that the gerbil is intelligent, in that when it reaches a node it will not immediately return to the node from which it has just come, choosing with equal probability from all other neighbouring nodes. Express the movement of the gerbil in terms of a Markov chain whose states and transition probabilities you should specify. Find the mean number of moves until the intelligent gerbil reaches node 1 . Compare with your answer to the first part, and comment briefly.

Paper 2, Section II, H

Part IB, 2009Suppose that is a non-empty subset of the statespace of a Markov chain with transition matrix , and let , with the convention that inf . If , show that the equations

(a)

are satisfied by .

If satisfies (a), prove that also satisfies

where

By interpreting the transition matrix , prove that is the minimal solution to the equations (a), (b).

Now suppose that is irreducible. Prove that is recurrent if and only if the only solutions to (a) are constant functions.

Paper 3, Section I, E

Part IB, 2010An intrepid tourist tries to ascend Springfield's famous infinite staircase on an icy day. When he takes a step with his right foot, he reaches the next stair with probability , otherwise he falls down and instantly slides back to the bottom with probability . Similarly, when he steps with his left foot, he reaches the next stair with probability , or slides to the bottom with probability . Assume that he always steps first with his right foot when he is at the bottom, and alternates feet as he ascends. Let be his position after his th step, so that when he is on the stair , where 0 is the bottom stair.

(a) Specify the transition probabilities for the Markov chain for any .

(b) Find the equilibrium probabilities , for . [Hint:

(c) Argue that the chain is irreducible and aperiodic and evaluate the limit

for each .

Paper 4, Section I, E

Part IB, 2010Consider a Markov chain with state space and transition probabilities given by the following table.

\begin{tabular}{c|cccc} & & & & \ \hline & & & & 0 \ & 0 & & 0 & \ & & 0 & & \ & 0 & & 0 & \end{tabular}

By drawing an appropriate diagram, determine the communicating classes of the chain, and classify them as either open or closed. Compute the following transition and hitting probabilities:

for a fixed

for some .

Paper 1, Section II, E

Part IB, 2010Let be a Markov chain.

(a) What does it mean to say that a state is positive recurrent? How is this property related to the equilibrium probability ? You do not need to give a full proof, but you should carefully state any theorems you use.

(b) What is a communicating class? Prove that if states and are in the same communicating class and is positive recurrent then is positive recurrent also.

A frog is in a pond with an infinite number of lily pads, numbered She hops from pad to pad in the following manner: if she happens to be on pad at a given time, she hops to one of pads with equal probability.

(c) Find the equilibrium distribution of the corresponding Markov chain.

(d) Now suppose the frog starts on pad and stops when she returns to it. Show that the expected number of times the frog hops is ! where What is the expected number of times she will visit the lily pad ?

Paper 2, Section II, E

Part IB, 2010Let be a simple, symmetric random walk on the integers , with and . For each integer , let . Show that is a stopping time.

Define a random variable by the rule

Show that is also a simple, symmetric random walk.

Let . Explain why for . By using the process constructed above, show that, for ,

and thus

Hence compute

when and are positive integers with . [Hint: if is even, then must be even, and if is odd, then must be odd.]

Paper 3, Section I, H

Part IB, 2011Let be a Markov chain with state space .

(i) What does it mean to say that has the strong Markov property? Your answer should include the definition of the term stopping time.

(ii) Show that

for a state . You may use without proof the fact that has the strong Markov property.

Paper 4, Section I, H

Part IB, 2011Let be a Markov chain on a state space , and let .

(i) What does the term communicating class mean in terms of this chain?

(ii) Show that .

(iii) The period of a state is defined to be

Show that if and are in the same communicating class and , then divides .

Paper 1, Section II, H

Part IB, 2011Let be the transition matrix for an irreducible Markov chain on the finite state space .

(i) What does it mean to say is the invariant distribution for the chain?

(ii) What does it mean to say the chain is in detailed balance with respect to ?

(iii) A symmetric random walk on a connected finite graph is the Markov chain whose state space is the set of vertices of the graph and whose transition probabilities are

where is the number of vertices adjacent to vertex . Show that the random walk is in detailed balance with respect to its invariant distribution.

(iv) Let be the invariant distribution for the transition matrix , and define an inner product for vectors by the formula

Show that the equation

holds for all vectors if and only if the chain is in detailed balance with respect to . [Here means .]

Paper 2, Section II, H

Part IB, 2011(i) Let be a Markov chain on the finite state space with transition matrix . Fix a subset , and let

Fix a function on such that for all , and let

where by convention. Show that

(ii) A flea lives on a polyhedron with vertices, labelled . It hops from vertex to vertex in the following manner: if one day it is on vertex , the next day it hops to one of the vertices labelled with equal probability, and it dies upon reaching vertex 1. Let be the position of the flea on day . What are the transition probabilities for the Markov chain ?

(iii) Let be the number of days the flea is alive, and let

where is a real number such that . Show that and

for . Conclude that

[Hint. Use part (i) with and a well-chosen function . ]

(iv) Show that

Paper 3, Section I, H

Part IB, 2012A runner owns pairs of running shoes and runs twice a day. In the morning she leaves her house by the front door, and in the evening she leaves by the back door. On starting each run she looks for shoes by the door through which she exits, and runs barefoot if none are there. At the end of each run she is equally likely to return through the front or back doors. She removes her shoes (if any) and places them by the door. In the morning of day 1 all shoes are by the back door so she must run barefoot.

Let be the probability that she runs barefoot on the morning of day . What conditions are satisfied in this problem which ensure exists? Show that its value is .

Find the expected number of days that will pass until the first morning that she finds all pairs of shoes at her front door.

Paper 4, Section I, H

Part IB, 2012Let be an irreducible Markov chain with . Define the meaning of the statements:

(i) state is transient,

(ii) state is aperiodic.

Give a criterion for transience that can be expressed in terms of the probabilities .

Prove that if a state is transient then all states are transient.

Prove that if a state is aperiodic then all states are aperiodic.

Suppose that unless is divisible by 3 . Given any other state , prove that unless is divisible by 3 .

Paper 1, Section II, 20H

Part IB, 2012A Markov chain has as its state space the integers, with

and otherwise. Assume .

Let if this is finite, and otherwise. Let be the total number of hits on 0 , and let be the total number of hits on 0 within times . Let

(i) Quoting an appropriate theorem, find, for every , the value of .

(ii) Show that if is any non-negative solution to the system of equations

then for all and .

(iii) Show that and .

(iv) Explain why for .

(v) Find for all .

Paper 2, Section II, H

Part IB, 2012Let be the symmetric random walk on vertices of a connected graph. At each step this walk jumps from the current vertex to a neighbouring vertex, choosing uniformly amongst them. Let . For each let and . Stating any theorems that you use:

(i) Prove that the invariant distribution satisfies detailed balance.

(ii) Use reversibility to explain why for all .

Consider a symmetric random walk on the graph shown below.

(iii) Find .

(iv) The removal of any edge leaves two disjoint components, one which includes and one which includes . Prove that , where is the number of edges in the component that contains .

(v) Show that for all .

Paper 4, Section I, H

Part IB, 2013Suppose is the transition matrix of an irreducible recurrent Markov chain with state space . Show that if is an invariant measure and for some , then for all .

Let

Give a meaning to and explain why .

Suppose is an invariant measure with . Prove that for all .

Paper 3, Section I, H

Part IB, 2013Prove that if a distribution is in detailed balance with a transition matrix then it is an invariant distribution for .

Consider the following model with 2 urns. At each time, one of the following happens:

with probability a ball is chosen at random and moved to the other urn (but nothing happens if both urns are empty);

with probability a ball is chosen at random and removed (but nothing happens if both urns are empty);

with probability a new ball is added to a randomly chosen urn,

where and . State denotes that urns 1,2 contain and balls respectively. Prove that there is an invariant measure

Find the proportion of time for which there are balls in the system.

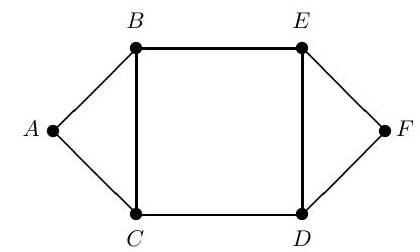

Paper 1, Section II, 20H

Part IB, 2013A Markov chain has state space and transition matrix

where the rows correspond to , respectively. Show that this Markov chain is equivalent to a random walk on some graph with 6 edges.

Let denote the mean first passage time from to .

(i) Find and .

(ii) Given , find the expected number of steps until the walk first completes a step from to .

(iii) Suppose the distribution of is . Let be the least such that appears as a subsequence of . By comparing the distributions of and show that and that

Paper 2, Section II, H

Part IB, 2013(i) Suppose is an irreducible Markov chain and for some . Prove that and that

(ii) Let be a symmetric random walk on the lattice. Prove that is recurrent. You may assume, for ,

(iii) A princess and monster perform independent random walks on the lattice. The trajectory of the princess is the symmetric random walk . The monster's trajectory, denoted , is a sleepy version of an independent symmetric random walk . Specifically, given an infinite sequence of integers , the monster sleeps between these times, so . Initially, and . The princess is captured if and only if at some future time she and the monster are simultaneously at .

Compare the capture probabilities for an active monster, who takes for all , and a sleepy monster, who takes spaced sufficiently widely so that

Paper 4, Section I, H

Part IB, 2014Let be a homogeneous Markov chain with state space and transition .

(a) Let Show that is a Markov chain and give its transition matrix. If , find in terms of the and the .

[Results from the course may be quoted without proof, provided they are clearly stated.]

(b) Suppose that and . Let , In terms of the , find

(i) and

(ii) .

What can you conclude about whether or not is a Markov chain?

Paper 3, Section I, H

Part IB, 2014Let be a homogeneous Markov chain with state space . For in let denote the -step transition probability .

(i) Express the -step transition probability in terms of the -step and -step transition probabilities.

(ii) Write if there exists such that , and if and . Prove that if and then either both and are recurrent or both and are transient. [You may assume that a state is recurrent if and only if , and otherwise is transient.]

(iii) A Markov chain has state space and transition matrix

For each state , determine whether is recurrent or transient. [Results from the course may be quoted without proof, provided they are clearly stated.]

Paper 1, Section II, 20H

Part IB, 2014Consider a homogeneous Markov chain with state space and transition . For a state , define the terms aperiodic, positive recurrent and ergodic.

Let and suppose that for we have and

where . Show that this Markov chain is irreducible.

Let be the first passage time to 0 . Find and show that state 0 is ergodic.

Find the invariant distribution for this Markov chain. Write down:

(i) the mean recurrence time for state ;

(ii) .

[Results from the course may be quoted without proof, provided they are clearly stated.]

Paper 2, Section II, H

Part IB, 2014Let be a homogeneous Markov chain with state space and transition matrix . For , let

Prove that is the minimal non-negative solution to the equations

Three people and play a series of two-player games. In the first game, two people play and the third person sits out. Any subsequent game is played between the winner of the previous game and the person sitting out the previous game. The overall winner of the series is the first person to win two consecutive games. The players are evenly matched so that in any game each of the two players has probability of winning the game, independently of all other games. For , let be the ordered pair consisting of the winners of games and . Thus the state space is , and, for example, if wins the first game and wins the second.

The first game is between and . Treating and as absorbing states, or otherwise, find the probability of winning the series for each of the three players.

Paper 4, Section I, H

Part IB, 2015Let be independent identically distributed random variables with . Let , where is a constant. For each of the following cases, determine whether or not is a Markov chain: (a) ; (b) ; (c) .

In each case, if is a Markov chain, explain why, and give its state space and transition matrix; if it is not a Markov chain, give an example to demonstrate that it is not.

Paper 3, Section I, H

Part IB, 2015Define what is meant by a communicating class and a closed class in a Markov chain.

A Markov chain with state space has transition matrix

Write down the communicating classes for this Markov chain and state whether or not each class is closed.

If , let be the smallest such that . Find for and . Describe the evolution of the chain if .

Paper 2, Section II, H

Part IB, 2015(a) What does it mean for a transition matrix and a distribution to be in detailed balance? Show that if and are in detailed balance then .

(b) A mathematician owns bicycles, which she sometimes uses for her journey from the station to College in the morning and for the return journey in the evening. If it is fine weather when she starts a journey, and if there is a bicycle available at the current location, then she cycles; otherwise she takes the bus. Assume that with probability , , it is fine when she starts a journey, independently of all other journeys. Let denote the number of bicycles at the current location, just before the mathematician starts the th journey.

(i) Show that is a Markov chain and write down its transition matrix.

(ii) Find the invariant distribution of the Markov chain.

(iii) Show that the Markov chain satisfies the necessary conditions for the convergence theorem for Markov chains and find the limiting probability that the mathematician's th journey is by bicycle.

[Results from the course may be used without proof provided that they are clearly stated.]

Paper 1, Section II, H

Part IB, 2015Consider a particle moving between the vertices of the graph below, taking steps along the edges. Let be the position of the particle at time . At time the particle moves to one of the vertices adjoining , with each of the adjoining vertices being equally likely, independently of previous moves. Explain briefly why is a Markov chain on the vertices. Is this chain irreducible? Find an invariant distribution for this chain.

Suppose that the particle starts at . By adapting the transition matrix, or otherwise, find the probability that the particle hits vertex before vertex .

Find the expected first passage time from to given no intermediate visit to .

[Results from the course may be used without proof provided that they are clearly stated.]

Paper 4, Section I, H

Part IB, 2016Consider two boxes, labelled and B. Initially, there are no balls in box and balls in box B. Each minute later, one of the balls is chosen uniformly at random and is moved to the opposite box. Let denote the number of balls in box A at time , so that .

(a) Find the transition probabilities of the Markov chain and show that it is reversible in equilibrium.

(b) Find , where is the next time that all balls are again in box .

Paper 3, Section I, H

Part IB, 2016Let be a Markov chain such that . Prove that

where . [You may use the strong Markov property without proof.]

Paper 2, Section II, H

Part IB, 2016(a) Prove that every open communicating class of a Markov chain is transient. Prove that every finite transient communicating class is open. Give an example of a Markov chain with an infinite transient closed communicating class.

(b) Consider a Markov chain with state space and transition probabilities given by the matrix

(i) Compute for a fixed .

(ii) Compute for some .

(iii) Show that converges as , and determine the limit.

[Results from lectures can be used without proof if stated carefully.]

Paper 1, Section II, H

Part IB, 2016Let be a simple symmetric random walk on the integers, starting at .

(a) What does it mean to say that a Markov chain is irreducible? What does it mean to say that an irreducible Markov chain is recurrent? Show that is irreducible and recurrent.

[Hint: You may find it helpful to use the limit

You may also use without proof standard necessary and sufficient conditions for recurrence.]

(b) What does it mean to say that an irreducible Markov chain is positive recurrent? Determine, with proof, whether is positive recurrent.

(c) Let

be the first time the chain returns to the origin. Compute for a fixed number .

Paper 3, Section I, H

Part IB, 2017(a) What does it mean to say that a Markov chain is reversible?

(b) Let be a finite connected graph on vertices. What does it mean to say that is a simple random walk on ?

Find the unique invariant distribution of .

Show that is reversible when .

[You may use, without proof, results about detailed balance equations, but you should state them clearly.]

Paper 4, Section I,

Part IB, 2017Prove that the simple symmetric random walk on is transient.

[Any combinatorial inequality can be used without proof.]

Paper 1, Section II, H

Part IB, 2017A rich and generous man possesses pounds. Some poor cousins arrive at his mansion. Being generous he decides to give them money. On day 1 , he chooses uniformly at random an integer between and 1 inclusive and gives it to the first cousin. Then he is left with pounds. On day 2 , he chooses uniformly at random an integer between and 1 inclusive and gives it to the second cousin and so on. If then he does not give the next cousin any money. His choices of the uniform numbers are independent. Let be his fortune at the end of day .

Show that is a Markov chain and find its transition probabilities.

Let be the first time he has 1 pound left, i.e. . Show that

Paper 2, Section II, H

Part IB, 2017Let be i.i.d. random variables with values in and . Moreover, suppose that the greatest common divisor of is 1 . Consider the following process

(a) Show that is a Markov chain and find its transition probabilities.

(b) Let . Find .

(c) Find the limit as of . State carefully any theorems from the course that you are using.

Paper 3, Section I, H

Part IB, 2018The mathematics course at the University of Barchester is a three-year one. After the end-of-year examinations there are three possibilities:

(i) failing and leaving (probability );

(ii) taking that year again (probability );

(iii) going on to the next year (or graduating, if the current year is the third one) (probability ).

Thus there are five states for a student year, year, year, left without a degree, graduated).

Write down the transition matrix. Classify the states, assuming . Find the probability that a student will eventually graduate.

Paper 4, Section I, H

Part IB, 2018Let be the transition matrix for an irreducible Markov chain on the finite state space .

(a) What does it mean to say that a distribution is the invariant distribution for the chain?

(b) What does it mean to say that the chain is in detailed balance with respect to a distribution ? Show that if the chain is in detailed balance with respect to a distribution then is the invariant distribution for the chain.

(c) A symmetric random walk on a connected finite graph is the Markov chain whose state space is the set of vertices of the graph and whose transition probabilities are

where is the number of vertices adjacent to vertex . Show that the random walk is in detailed balance with respect to its invariant distribution.

Paper 1, Section II, H

Part IB, 2018A coin-tossing game is played by two players, and . Each player has a coin and the probability that the coin tossed by player comes up heads is , where . The players toss their coins according to the following scheme: tosses first and then after each head, pays one pound and has the next toss, while after each tail, pays one pound and has the next toss.

Define a Markov chain to describe the state of the game. Find the probability that the game ever returns to a state where neither player has lost money.

Paper 2, Section II, H

Part IB, 2018For a finite irreducible Markov chain, what is the relationship between the invariant probability distribution and the mean recurrence times of states?

A particle moves on the vertices of the hypercube, , in the following way: at each step the particle is equally likely to move to each of the adjacent vertices, independently of its past motion. (Two vertices are adjacent if the Euclidean distance between them is one.) The initial vertex occupied by the particle is . Calculate the expected number of steps until the particle

(i) first returns to ,

(ii) first visits ,

(iii) first visits .

Paper 4, Section I, H

Part IB, 2019For a Markov chain on a state space with , we let for be the probability that when .

(a) Let be a Markov chain. Prove that if is recurrent at a state , then . [You may use without proof that the number of returns of a Markov chain to a state when starting from has the geometric distribution.]

(b) Let and be independent simple symmetric random walks on starting from the origin 0 . Let . Prove that and deduce that . [You may use without proof that for all and , and that is recurrent at 0.]

Paper 3, Section I, H

Part IB, 2019Suppose that is a Markov chain with state space .

(a) Give the definition of a communicating class.

(b) Give the definition of the period of a state .

(c) Show that if two states communicate then they have the same period.

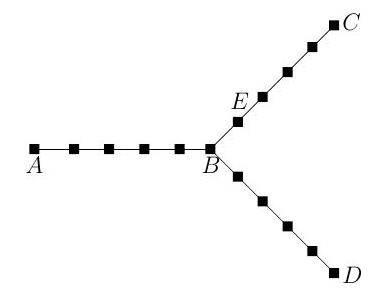

Paper 2, Section II, H

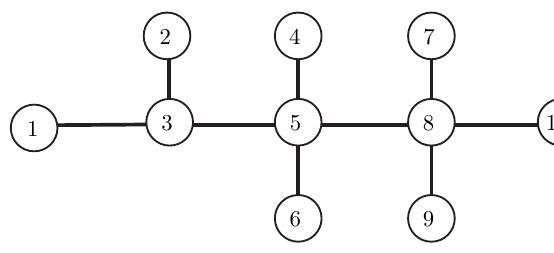

Part IB, 2019Fix and let be the graph consisting of a copy of joining vertices and , a copy of joining vertices and , and a copy of joining vertices and . Let be the vertex adjacent to on the segment from to . Shown below is an illustration of in the case . The vertices are solid squares and edges are indicated by straight lines.

Let be a simple random walk on . In other words, in each time step, moves to one of its neighbours with equal probability. Assume that .

(a) Compute the expected amount of time for to hit .

(b) Compute the expected amount of time for to hit . [Hint: first show that the expected amount of time for to go from to satisfies where is the expected return time of to when starting from .]

(c) Compute the expected amount of time for to hit . [Hint: for each , let be the vertex which is places to the right of on the segment from to . Derive an equation for the expected amount of time for to go from to .]

Justify all of your answers.

Paper 1, Section II, H

Part IB, 2019Let be a transition matrix for a Markov chain on a state space with elements with . Assume that the Markov chain is aperiodic and irreducible and let be its unique invariant distribution. Assume that .

(a) Let . Show that .

(b) Let . Compute in terms of an explicit function of .

(c) Suppose that a cop and a robber start from a common state chosen from . The robber then takes one step according to and stops. The cop then moves according to independently of the robber until the cop catches the robber (i.e., the cop visits the state occupied by the robber). Compute the expected amount of time for the cop to catch the robber.

Paper 2, Section I, H

Part IB, 2020Let be a Markov chain with state space and transition matrix

where . Compute . Find the value of .

Paper 1, Section II, H

Part IB, 2020Let be a Markov chain with transition matrix . What is a stopping time of ? What is the strong Markov property?

A porter is trying to apprehend a student who is walking along a long narrow path at night. Being unaware of the porter, the student's location at time evolves as a simple symmetric random walk on . The porter's initial location is units to the right of the student, so where . The future locations of the porter evolve as follows: The porter moves to the left (so ) with probability , and to the right with probability whenever . When , the porter's probability of moving left changes to , and the probability of moving right is .

(a) By setting up an appropriate Markov chain, show that for , the expected time for the porter to be a distance away from the student is .

(b) Show that the expected time for the porter to catch the student, i.e. for their locations to coincide, is

[You may use without proof the fact that the time for the porter to catch the student is finite with probability 1 for any .]

Paper 3 , Section I, H

Part IB, 2021Consider a Markov chain on a state space .

(a) Define the notion of a communicating class. What does it mean for a communicating class to be closed?

(b) Taking , find the communicating classes associated with the transition matrix given by

and identify which are closed.

(c) Find the expected time for the Markov chain with transition matrix above to reach 6 starting from 1 .

Paper 4, Section I, H

Part IB, 2021Show that the simple symmetric random walk on is recurrent.

Three particles perform independent simple symmetric random walks on . What is the probability that they are all simultaneously at 0 infinitely often? Justify your answer.

[You may assume without proof that there exist constants such that for all positive integers

Paper 1, Section II, 19H

Part IB, 2021Let be a Markov chain with transition matrix . What is a stopping time of ? What is the strong Markov property?

The exciting game of 'Unopoly' is played by a single player on a board of 4 squares. The player starts with (where ). During each turn, the player tosses a fair coin and moves one or two places in a clockwise direction according to whether the coin lands heads or tails respectively. The player collects each time they pass (or land on) square 1. If the player lands on square 3 however, they immediately lose and go back to square 2. The game continues indefinitely unless the player is on square 2 with , in which case the player loses the game and the game ends.

(a) By setting up an appropriate Markov chain, show that if the player is at square 2 with , where , the probability that they are ever at square 2 with is

(b) Find the probability of losing the game when the player starts on square 1 with , where .

[Hint: Take the state space of your Markov chain to be .]

Paper 2, Section II, 18H

Part IB, 2021Let be a transition matrix on state space . What does it mean for a distribution to be an invariant distribution? What does it mean for and to be in detailed balance? Show that if and are in detailed balance, then is an invariant distribution.

(a) Assuming that an invariant distribution exists, state the relationship between this and

(i) the expected return time to a state ;

(ii) the expected time spent in a state between visits to a state .

(b) Let be a Markov chain with transition matrix where . The transition probabilities are given for by

where . For let . Compute the following, justifying your answers:

(i) The expected time spent in states between visits to state 1 ;

(ii) The expected time taken to return to state 1 , starting from 1 ;

(iii) The expected time taken to hit state 0 starting from

A1.1 B1.1

Part II, 2001(i) Let be an irreducible Markov chain on the finite state space with transition matrix and invariant distribution . What does it mean to say that is reversible in equilibrium?

Show that is reversible in equilibrium if and only if for all .

(ii) A finite connected graph has vertex set and edge set , and has neither loops nor multiple edges. A particle performs a random walk on , moving at each step to a randomly chosen neighbour of the current position, each such neighbour being picked with equal probability, independently of all previous moves. Show that the unique invariant distribution is given by where is the degree of vertex .

A rook performs a random walk on a chessboard; at each step, it is equally likely to make any of the moves which are legal for a rook. What is the mean recurrence time of a corner square. (You should give a clear statement of any general theorem used.)

[A chessboard is an square grid. A legal move is one of any length parallel to the axes.]

A2.1

Part II, 2001(i) The fire alarm in Mill Lane is set off at random times. The probability of an alarm during the time-interval is where the 'intensity function' may vary with the time . Let be the number of alarms by time , and set . Show, subject to reasonable extra assumptions to be stated clearly, that satisfies

Deduce that has the Poisson distribution with parameter .

(ii) The fire alarm in Clarkson Road is different. The number of alarms by time is such that

where , and . Show, subject to suitable extra conditions, that satisfies a set of differential-difference equations to be specified. Deduce without solving these equations in their entirety that has mean , and find the variance of .

A3.1 B3.1

Part II, 2001(i) Explain what is meant by the transition semigroup of a Markov chain in continuous time. If the state space is finite, show under assumptions to be stated clearly, that for some matrix . Show that a distribution satisfies if and only if for all , and explain the importance of such .

(ii) Let be a continuous-time Markov chain on the state space with generator

Show that the transition semigroup is given by

where .

For , let

For a continuous-time chain , let be a matrix with entry

, for . Show that there is a chain with if and only if .

A4.1

Part II, 2001Write an essay on the convergence to equilibrium of a discrete-time Markov chain on a countable state-space. You should include a discussion of the existence of invariant distributions, and of the limit theorem in the non-null recurrent case.

A1.1 B1.1

Part II, 2002(i) We are given a finite set of airports. Assume that between any two airports, and , there are flights in each direction on every day. A confused traveller takes one flight per day, choosing at random from all available flights. Starting from , how many days on average will pass until the traveller returns again to ? Be careful to allow for the case where there may be no flights at all between two given airports.

(ii) Consider the infinite tree with root , where, for all , all vertices at distance from have degree 3 , and where all other vertices (except ) have degree 2 . Show that the random walk on is recurrent.

A2.1

Part II, 2002(i) In each of the following cases, the state-space and non-zero transition rates of a continuous-time Markov chain are given. Determine in which cases the chain is explosive.

(ii) Children arrive at a see-saw according to a Poisson process of rate 1 . Initially there are no children. The first child to arrive waits at the see-saw. When the second child arrives, they play on the see-saw. When the third child arrives, they all decide to go and play on the merry-go-round. The cycle then repeats. Show that the number of children at the see-saw evolves as a Markov Chain and determine its generator matrix. Find the probability that there are no children at the see-saw at time .

Hence obtain the identity

A3.1 B3.1

Part II, 2002(i) Consider the continuous-time Markov chain on with generator matrix

Compute the probability, starting from state 3 , that hits state 2 eventually.

Deduce that

[Justification of standard arguments is not expected.]

(ii) A colony of cells contains immature and mature cells. Each immature cell, after an exponential time of parameter 2, becomes a mature cell. Each mature cell, after an exponential time of parameter 3, divides into two immature cells. Suppose we begin with one immature cell and let denote the expected number of immature cells at time . Show that

A4.1

Part II, 2002Write an essay on the long-time behaviour of discrete-time Markov chains on a finite state space. Your essay should include discussion of the convergence of probabilities as well as almost-sure behaviour. You should also explain what happens when the chain is not irreducible.

A1.1 B1.1

Part II, 2003(i) Let be a simple symmetric random walk in , starting from , and set . Determine the quantities and and .

(ii) Let be a discrete-time Markov chain with state-space and transition matrix . What does it mean to say that a state is recurrent? Prove that is recurrent if and only if , where denotes the entry in .

Show that the simple symmetric random walk in is recurrent.

A2.1

Part II, 2003(i) What is meant by a Poisson process of rate ? Show that if and are independent Poisson processes of rates and respectively, then is also a Poisson process, and determine its rate.

(ii) A Poisson process of rate is observed by someone who believes that the first holding time is longer than all subsequent holding times. How long on average will it take before the observer is proved wrong?

A3.1 B3.1

Part II, 2003(i) Consider the continuous-time Markov chain with state-space and -matrix

Set

and

Determine which, if any, of the processes and are Markov chains.

(ii) Find an invariant distribution for the chain given in Part (i). Suppose . Find, for all , the probability that .

A4.1

Part II, 2003Consider a pack of cards labelled . We repeatedly take the top card and insert it uniformly at random in one of the 52 possible places, that is, either on the top or on the bottom or in one of the 50 places inside the pack. How long on average will it take for the bottom card to reach the top?

Let denote the probability that after iterations the cards are found in increasing order. Show that, irrespective of the initial ordering, converges as , and determine the limit . You should give precise statements of any general results to which you appeal.

Show that, at least until the bottom card reaches the top, the ordering of cards inserted beneath it is uniformly random. Hence or otherwise show that, for all ,

A1.1 B1.1

Part II, 2004(i) Give the definitions of a recurrent and a null recurrent irreducible Markov chain.

Let be a recurrent Markov chain with state space and irreducible transition matrix . Prove that the vectors , with entries and

are -invariant:

(ii) Let be the birth and death process on with the following transition probabilities:

By relating to the symmetric simple random walk on , or otherwise, prove that is a recurrent Markov chain. By considering invariant measures, or otherwise, prove that is null recurrent.

Calculate the vectors for the chain .

Finally, let and let be the number of visits to 1 before returning to 0 . Show that .

[You may use properties of the random walk or general facts about Markov chains without proof but should clearly state them.]

A2.1

Part II, 2004(i) Let be a proper subset of the finite state space of an irreducible Markov chain , whose transition matrix is partitioned as

If only visits to states in are recorded, we see a -valued Markov chain ; show that its transition matrix is

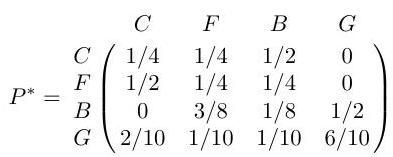

(ii) Local MP Phil Anderer spends his time in London in the Commons , in his flat , in the bar or with his girlfriend . Each hour, he moves from one to another according to the transition matrix , though his wife (who knows nothing of his girlfriend) believes that his movements are governed by transition matrix :

The public only sees Phil when he is in ; calculate the transition matrix which they believe controls his movements.

Each time the public Phil moves to a new location, he phones his wife; write down the transition matrix which governs the sequence of locations from which the public Phil phones, and calculate its invariant distribution.

Phil's wife notes down the location of each of his calls, and is getting suspicious - he is not at his flat often enough. Confronted, Phil swears his fidelity and resolves to dump his troublesome transition matrix, choosing instead

Will this deal with his wife's suspicions? Explain your answer.

A3.1 B3.1

Part II, 2004(i) Give the definition of the time-reversal of a discrete-time Markov chain . Define a reversible Markov chain and check that every probability distribution satisfying the detailed balance equations is invariant.

(ii) Customers arrive in a hairdresser's shop according to a Poisson process of rate . The shop has hairstylists and waiting places; each stylist is working (on a single customer) provided that there is a customer to serve, and any customer arriving when the shop is full (i.e. the numbers of customers present is ) is not admitted and never returns. Every admitted customer waits in the queue and then is served, in the first-come-first-served order (say), the service taking an exponential time of rate ; the service times of admitted customers are independent. After completing his/her service, the customer leaves the shop and never returns.

Set up a Markov chain model for the number of customers in the shop at time . Assuming , calculate the equilibrium distribution of this chain and explain why it is unique. Show that in equilibrium is time-reversible, i.e. has the same distribution as where , and .

A4.1

Part II, 2004(a) Give three definitions of a continuous-time Markov chain with a given -matrix on a finite state space: (i) in terms of holding times and jump probabilities, (ii) in terms of transition probabilities over small time intervals, and (iii) in terms of finite-dimensional distributions.

(b) A flea jumps clockwise on the vertices of a triangle; the holding times are independent exponential random variables of rate one. Find the eigenvalues of the corresponding -matrix and express transition probabilities , in terms of these roots. Deduce the formulas for the sums

in terms of the functions and .

Find the limits

What is the connection between the decompositions and